I think I have some ideas about what the problem is with inheritance and, specifically, with multiple inheritance. Let's start with geometry.

A plain pedestrian perceives 2-dimensional space as some kind of lawn, where you can walk back and forth and know your position by measuring the distance to the fences. (That's like the Bible's universe, except that the Bible's universe is 3d, 100 miles by 100 miles by 5 miles.) So people think, aha, I can describe the ball as a point in space, 10 feet from the back fence ('x'), 30 feet from the left fence ('y').

Then 3d comes into the picture. We programmers don't deal with 3d. We deal with an element's position in the window. But there's 3d out there. And the third dimension is spectacularly different. If you place a point somewhere, it will fall to the surface. The height from which it falls is called 'z'. It is like the other two coordinates, but it's different, because it falls if you don't hold it. So the point in space is the point on the lawn decorated by the height at which it is currently held. Let's be real: gravity will drag it to the surface anyway, so it is not natural for an average point to have a height.

In your code you write it like this:

    class Point {
        double x;
        double y;
    }

    class ThreeDPoint extends Point {
        double z;
    }

The software design purists tell us that we should write

    class ThreeDPoint {
        Point point;
        double z;
    }

This way we won't be mixing the two kinds of points by, say, checking whether an instance of ThreeDPoint lies between two Points. Even equality checking may be problematic: new Point(1, 2).equals(new ThreeDPoint(1, 2, 3)) holds, but not vice versa. Josh Bloch told us in his books that equality should be symmetric.
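Here is a minimal sketch of how that asymmetry comes about. The constructors and the equals() bodies are my assumptions (the snippets above define neither); they are just the most naive way one might write them.

```java
import java.util.Objects;

// Hypothetical equals() implementations, written only to reproduce the asymmetry.
class Point {
    final double x;
    final double y;

    Point(double x, double y) {
        this.x = x;
        this.y = y;
    }

    @Override
    public boolean equals(Object other) {
        // Accepts any Point, including subclasses such as ThreeDPoint.
        if (!(other instanceof Point)) return false;
        Point p = (Point) other;
        return x == p.x && y == p.y;
    }

    @Override
    public int hashCode() {
        return Objects.hash(x, y);
    }
}

class ThreeDPoint extends Point {
    final double z;

    ThreeDPoint(double x, double y, double z) {
        super(x, y);
        this.z = z;
    }

    @Override
    public boolean equals(Object other) {
        // A plain Point is not a ThreeDPoint, so this side answers "no".
        if (!(other instanceof ThreeDPoint)) return false;
        ThreeDPoint p = (ThreeDPoint) other;
        return super.equals(p) && z == p.z;
    }
}

class EqualityDemo {
    public static void main(String[] args) {
        Point flat = new Point(1, 2);
        Point lifted = new ThreeDPoint(1, 2, 3);
        System.out.println(flat.equals(lifted)); // true
        System.out.println(lifted.equals(flat)); // false
    }
}
```

The composition version never gets into this situation, because there a ThreeDPoint simply is not a Point.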

I don't know if you have read in some other book that it does not make any sense to define equality for objects of different types. Let me try to show why. Say you have one function that takes a user name and returns the user's age (doing some magic, like guessing that a Sue should be over 70 and a Ryan should be under 35); another function takes an integer and returns its decimal representation. Can you seriously discuss whether these two functions can have a common value for a certain argument? (In a sense they actually can: a one-year-old Japanese boy named Ichi, for instance.)

But let's return to three-dimensional space. Now that we have successfully (double-successfully, since we have two solutions) defined a three-dimensional point, let's think about rotating the coordinates. Not that we do it here on our flat Earth, but on the space station they do it all the time. Have you heard? They have no gravity! There's no special 'z'; all three dimensions are made equal. And the idea that a three-dimensional point is some kind of extension of a two-dimensional point does not fly in space. What do you think is the location of, say, Mars? What is the height of Mars? Mars does not have a height. Even our Earth does not have a height.

To switch from one coordinate system to another we need to do what? Shift and rotate. Now it turns out that the three dimensions are made equal; the space is (actually it is not, but that's the latest news from astrophysics) homogeneous and isotropic (or else we would be able to save the energy, according to a certain theorem).

Our representation of a 3-d point as a decoration of a 2-d point just does not work.
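To see the decoration break, try a rotation. The sketch below is only an illustration: the class and method names are made up, and a point is a bare array of three doubles. It rotates about the x axis, which mixes the "lawn" coordinate y with the "height" z.

```java
// Hypothetical helper: rotate (x, y, z) about the x axis by an angle in radians.
class Rotation {
    static double[] rotateAboutX(double[] p, double angle) {
        double c = Math.cos(angle);
        double s = Math.sin(angle);
        return new double[] {
            p[0],                 // x is untouched by this rotation
            c * p[1] - s * p[2],  // the new y depends on the old z
            s * p[1] + c * p[2]   // the new z depends on the old y
        };
    }
}

class RotationDemo {
    public static void main(String[] args) {
        // A point lying on the lawn: its height is zero.
        double[] onTheLawn = {0.0, 1.0, 0.0};
        double[] turned = Rotation.rotateAboutX(onTheLawn, Math.PI / 2);
        // Up to rounding, what used to be 'y' is now entirely 'z'.
        System.out.println(turned[1] + " " + turned[2]);
    }
}
```

After a quarter turn the "2d part" of the point and its "decoration" have swapped roles, so there is no stable way to say which field belongs to the base class.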

For a mathematician I'll express it like this: R³ is isomorphic to R×(R×R), but not equal to it. See the difference? For a programmer, it looks like this:

(x, y, z) can be modeled as (x, (y, z)), or as ((x, y), z), or as (y, (z, x)), or as ((z, 42), (y, 55, x)), but they are all different things. Only gravity was forcing us earthlings to think of (x, y, z) as ((x, y), z).
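In Java terms, "isomorphic but not equal" might look like the sketch below. Pair and Nestings are hypothetical names introduced just for this illustration. The two nestings are distinct types; you can convert back and forth without losing anything, but the compiler will not let you pass one where the other is expected.

```java
// Hypothetical generic pair, used only to spell out the nestings.
final class Pair<A, B> {
    final A first;
    final B second;

    Pair(A first, B second) {
        this.first = first;
        this.second = second;
    }
}

class Nestings {
    // ((x, y), z) -> (x, (y, z)): nothing is lost, yet the type changes.
    static Pair<Double, Pair<Double, Double>> regroup(Pair<Pair<Double, Double>, Double> p) {
        return new Pair<>(p.first.first, new Pair<>(p.first.second, p.second));
    }

    // (x, (y, z)) -> ((x, y), z): the inverse conversion.
    static Pair<Pair<Double, Double>, Double> regroupBack(Pair<Double, Pair<Double, Double>> p) {
        return new Pair<>(new Pair<>(p.first, p.second.first), p.second.second);
    }
}
```

The pair of conversions is the isomorphism; the fact that you have to call them at all is the "not equal" part.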

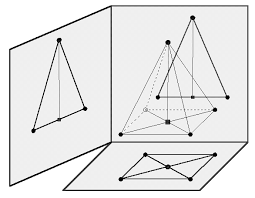

There are relations between 2d and 3d, though. A 3d point can be projected to 2d. (x, y), and (x, z), and (y, z) are all projections to the appropriate planes.
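As a rough sketch, projections could be plain methods on a standalone 3d class instead of an inheritance link. SpacePoint and PlanePoint are illustrative names, not anything from the code earlier in this post.

```java
// Hypothetical names: a 2d point that knows nothing about 3d.
final class PlanePoint {
    final double a;
    final double b;

    PlanePoint(double a, double b) {
        this.a = a;
        this.b = b;
    }
}

// A 3d point that neither extends nor wraps a 2d point.
final class SpacePoint {
    final double x;
    final double y;
    final double z;

    SpacePoint(double x, double y, double z) {
        this.x = x;
        this.y = y;
        this.z = z;
    }

    // Three equally valid views; none of them is "the" base class.
    PlanePoint projectXY() { return new PlanePoint(x, y); }
    PlanePoint projectXZ() { return new PlanePoint(x, z); }
    PlanePoint projectYZ() { return new PlanePoint(y, z); }
}
```

No projection is privileged here, and a SpacePoint can never be mistaken for a PlanePoint in a comparison.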

But what I wanted to say is this. If you are thinking of decorating your class with some more attributes, think again. Probably what you actually need is another class with natural projections (they are called views in SQL) to your old class. We tend to think of some attributes as "external" and of others as "internal". Say, an object id is at the heart of your design, right? Are objects "decorations" of their ids? We don't think so, do we?

Oh, and about the "diamond inheritance problem". Do you know how relational databases solve this problem? Check it out. It's called "normalization".

The pyramid here is neither a square nor a triangle. But when projected, it can be perceived as one or the other. If we say "he's a soldier", it does not mean that's all the person is; what we mean is just a projection.
